Words fail me on this one (well, not really). How incomprehensible is it? Let me count the ways.

First off, we’re confronted with the somewhat cryptic information that we’re seeing the “cosmos” at a distance of 450 million light years. Mmmm hmmm. Then we have a color bar at the bottom, sans units, that tells us that the values range from –1.0 something to 4.3 something. Other labels appear scattered pell-mell across the image… “Fur-For”? “Per-Peg”? What is an Average Jane to make of these? (They’re short for “Further Fornax” and “Perseus-Pegasus,” BTW. I’ll even make the obnoxious grammarian observation that it should be “Farther Fornax” if anything.) And to add insult to injury, the red typeface blends right into the color scheme of the plot!

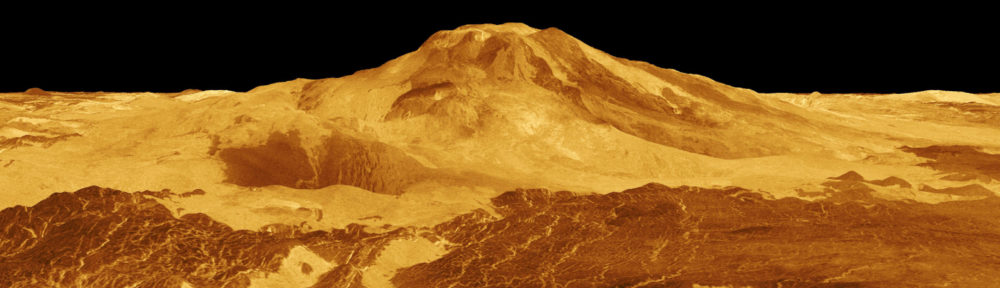

What saddens me most about this image is that it represents a spectacular result poorly communicated. What you’re looking at, FYI, is a color-coded representation of matter density within a thin sliver of the Universe at an approximate distance of (you guessed it) 450 million light years. The press release correctly identifies this as “the largest full-sky, three-dimensional survey of galaxies ever conducted” (based on 2MASS data, just so you know). How kewl is that? You wouldn’t know from this picture.

(If I were petty, I would note that the headings on the images don’t even match the captions provided in the press release from the Royal Astronomical Society, at least as it was emailed to me. For example, the caption for the image above reads, “The reconstructed density field, evaluated on a thin shell… at **45 million light years**. The main overdensity, Shapley, is shown in red and green. Other overdensities are Rixos F231\526 (RIX), SC44, C19, Pisces (Pis), Perseus-Pegasus (Per-Peg), C25, C26, Hercules (Her), Abell S0757, C28, C29, C30, SC 43, Leo, Abell 3376, C27 and C21. The voids (blue) are V4, Further-Fornax (Fur-For) and V15.” Emphasis mine. But I’m not petty. Oh, and the picture is correctly labelled; the caption is wrong.)

The academic paper presents the same images as black-and-white contour plots, so I can only assume that the researchers believed they were translating their results into a user-friendly format simply by adding garish color.

Allow me to digress for a moment to explain why I see this as such an egregious mistake. My two-bit definition of science visualization goes something like this… We make images from data (i.e., ones and zeros) for essentially one of three purposes: 1) communication to oneself in the form of data analysis, 2) communication to peers, often in the form of a graph or contour plot, and 3) communication to a general audience. The first two purposes depend heavily on a well-developed visual language (for example, knowing which variables you’re plotting against one another, knowing what a color-coding means, etc.) that tends to be highly specialized. Those of us who have been reading Cartesian coordinates for most of our lives forget what it’s like not to be exposed to that visual vocabulary on a day-to-day basis. Needless to say, scientists tend to communicate via an extremely sophisticated visual language (one that furthermore varies from discipline to discipline). The biggest problem occurs when scientists try to make the leap to the third form of visualization: communicating to the general public. It’s difficult to translate their complex visual language into a visual vernacular. (More details on my “personal paradigm” are available as part of my “What Is Viz?” PowerPoint, but be warned that you need to read the comments for each slide; otherwise, it’s just a bunch of pictures.)

Thus, the fundamental issue I see here can be likened to a mistranslation. The image above uses a very specific vocabulary (e.g., false color, an Aitoff projection of a sphere onto a plane) to describe a small part of the Universe. Because no attempt is made to translate the truly challenging aspects of the image, what we get is candy-colored data sans any quantifying information, and the result is a terribly confusing message.

Oddly enough, we have played with an earlier version of the 2MASS data as part of the Hayden Planetarium Digital Universe, so I’ve seen (in 3-D) the galaxy data upon which this is based. The data certainly lend themselves to a 3-D representation, and I can imagine a fly-through in which the matter distribution is represented by 3-D isosurfaces or, if a lot of rendering time were at one’s disposal, by volumetric rendering (similar to the manner in which some dark matter simulations are depicted).