Like many research organizations, the Royal Society has a “Picture of the Month” that it displays on its web page. This month, they reproduce the image above, a 19th-century lithograph based on a 17th-century oil by Roelandt Savery. I would like to draw your attention to a critical attribute of this lithograph: it sucks. Particularly if you have no idea what a dodo looks like.

Comparing the above image to Savery’s most famous oil of a dodo, it seems as though the 19th-century copyist (somebody named “Erxleben”) may simply have lacked talent. Admittedly, the caption on the web site indicates that it merely reproduces “a small detail” of the lithograph, but even at that, it’s hard to take the person’s skill seriously.

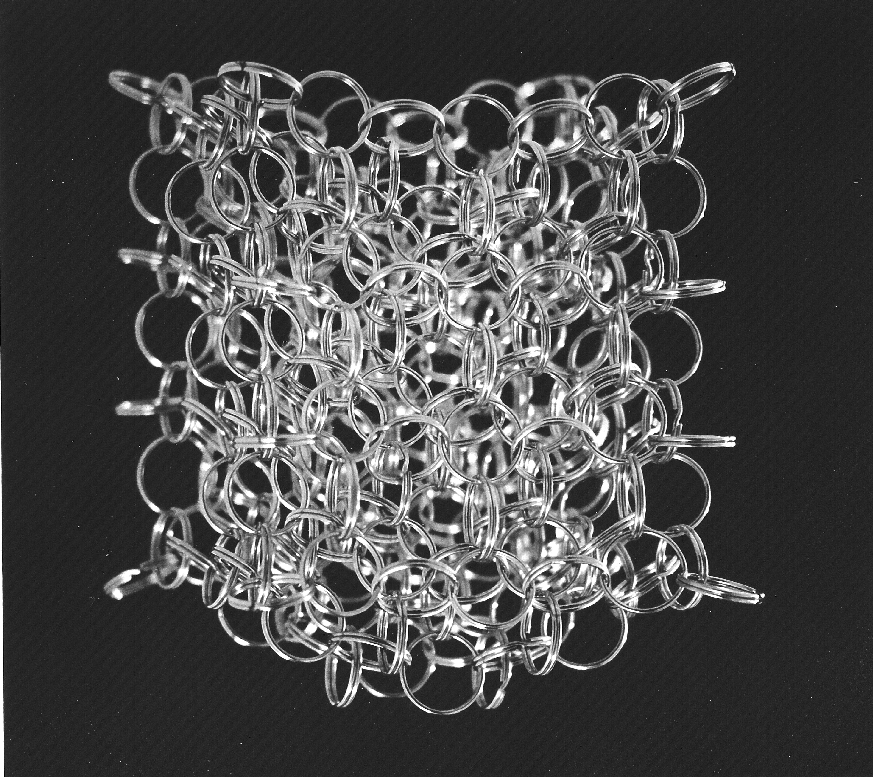

I choose this image to highlight how far we’ve come. Three-dimensional computer reconstructions and digital images from spacecraft a billion miles from home are only the tip of the iceberg! We have a plethora of techniques to take scientific data and transform them into pictures. But the work started with scientists and artists putting pencil to paper, or brush to canvas, or crayon to limestone… Photography, of course, only started in the middle of the 19th century, and digital imaging techniques are only thirty-some-odd years old.

What we now take for granted is the fidelity of a representation to its sources. Specialists might quibble over the use of color or the “fixing” of errors such as bad pixels, but fundamentally, we all think of contemporary visualizations as accurate in a way that few drawings or paintings, even those executed by gifted artists, could ever hope to be. When you couple that inherent limitation with the potential incompetence of a secondary or tertiary artist such as, say, Erxleben, you quickly see how successive copies of a work used to grow worse over time. (For another good example, compare Galileo’s original delicate watercolors of moon phases with the respectable engravings he commissioned for Sidereus Nuncius, as well as the mediocre woodcuts that appeared in a knock-off, unauthorized printing of the same.) We no longer need to worry about such things.

Of course, there are plenty of other things for science visualizers to worry about (or at least consider and mull over). That’s why I started this blog, after all…